Integrating Apache Airflow with Apache Ambari

Some time ago I was wondering why useful services that could be integrated with Apache Ambari didn’t have such an integration. Since I already had some experience creating custom management packs for Ambari, and had built a few for custom services we use, I thought it would be great to see Apache Airflow in Ambari and be able to manage it from there.

So I made a management pack (Mpack) for Airflow. It lets you install and configure an Airflow instance directly from Ambari. I also committed it to GitHub, so you can find the Mpack on my GitHub page.

If you’re worried that this is a non-standard installation, don’t be. Deploying Airflow from Ambari follows the same process as the official Airflow documentation: Ambari installs Airflow from the pip repository, creates systemd scripts, and starts/stops the Airflow services with those scripts. Concretely, Ambari performs the following operations:

Installation: `pip install apache-airflow[all]==1.9.0 apache-airflow[celery]==1.9.0`

Start/Stop (e.g. webserver): `service airflow-webserver start`

The advantages of the management pack are:

1. Installing airflow.

2. Managing airflow.

3. Checking services status.

4. Getting ambari alert when airflow web server service is unreachable.

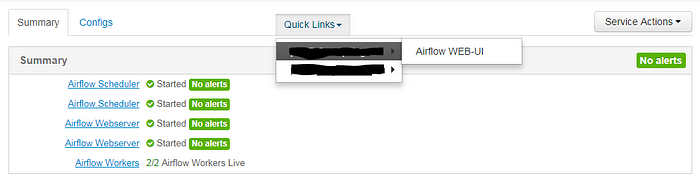

5. Using ambari “Quick Links” to access airflow web part.
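The Start/Stop operation listed earlier relies on systemd units that the Mpack creates. As a rough sketch of what such a unit can look like, the shell function below writes a webserver unit into a target directory. The unit contents, the `airflow` user and group, the `/usr/bin/airflow` path and port 8080 are assumptions for illustration, not copied from the Mpack.

```shell
#!/usr/bin/env bash
# Hypothetical sketch: write a systemd unit similar in spirit to the one the
# Mpack creates. User, binary path and port are assumptions.
set -eu

write_webserver_unit() {
  local unit_dir="${1:-/etc/systemd/system}"   # assumed install location
  local airflow_bin="${2:-/usr/bin/airflow}"   # assumed path to the airflow CLI
  local port="${3:-8080}"                      # assumed webserver port

  mkdir -p "$unit_dir"
  # Unquoted EOF so ${airflow_bin} and ${port} are expanded into the file.
  cat > "$unit_dir/airflow-webserver.service" <<EOF
[Unit]
Description=Airflow webserver daemon
After=network.target

[Service]
# Hypothetical service account; use whatever user runs Airflow.
User=airflow
Group=airflow
Type=simple
ExecStart=${airflow_bin} webserver -p ${port}
Restart=on-failure
RestartSec=5s

[Install]
WantedBy=multi-user.target
EOF
}
```

After writing the file, run `systemctl daemon-reload` so systemd picks up the new unit; `service airflow-webserver start` then works as described above.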

So if you want the same in your Ambari, let’s walk through the installation process.

Installing Apache Airflow management pack

Preparing Ambari

First, you need to install the Mpack in Ambari. Download ‘airflow-service-mpack.tar.gz’ from the GitHub page, then log in to the Ambari server host over SSH and follow the steps below:
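If you prefer to run the three steps below as a single script, here is a minimal sketch. It assumes the archive path is passed in (defaulting to the current directory); the `AMBARI_SERVER` variable is a hypothetical hook that only exists so the command can be swapped out, for example in a dry run.

```shell
#!/usr/bin/env bash
# Minimal sketch: stop Ambari, install the Airflow Mpack, start Ambari.
# AMBARI_SERVER is a hypothetical override hook (defaults to the real CLI).
set -eu  # abort on the first failing command

install_airflow_mpack() {
  local mpack="${1:-airflow-service-mpack.tar.gz}"
  local ambari="${AMBARI_SERVER:-ambari-server}"

  # Fail early, before stopping the server, if the archive is missing.
  if [ ! -f "$mpack" ]; then
    echo "Management pack not found: $mpack" >&2
    return 1
  fi

  "$ambari" stop
  "$ambari" install-mpack --mpack="$mpack"
  "$ambari" start
}
```

Usage: `install_airflow_mpack ./airflow-service-mpack.tar.gz`. Checking for the archive first avoids leaving the Ambari server stopped because of a typo in the path.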

1. Stop Ambari server.

`ambari-server stop`

2. Install the Apache Airflow management pack.

`ambari-server install-mpack --mpack=airflow-service-mpack.tar.gz`

3. Start Ambari server.

`ambari-server start`

Deploying Apache Airflow from Ambari

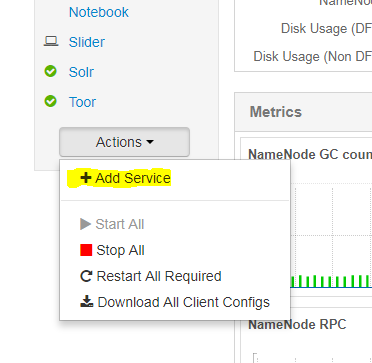

Now it’s as easy as deploying any other service available in the HDP stack.

1. Choose Actions > Add Service.

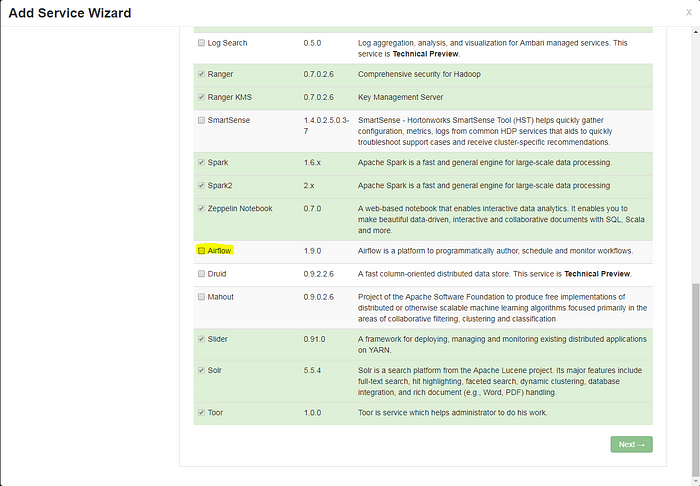

2. Select Apache Airflow service.

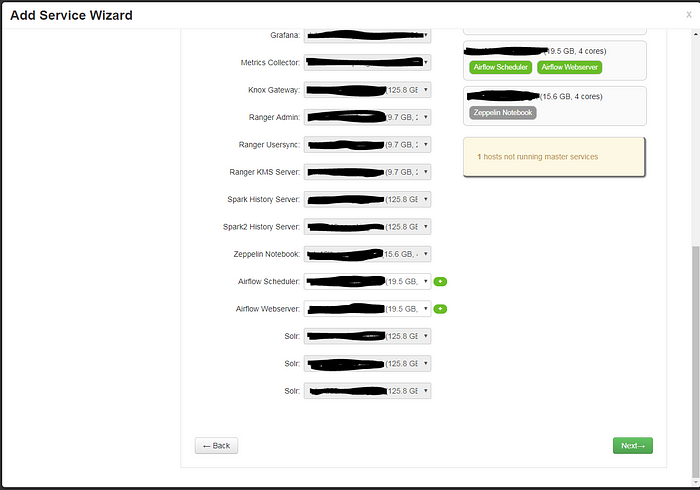

3. Choose the destination server(s) for the webserver and scheduler services.

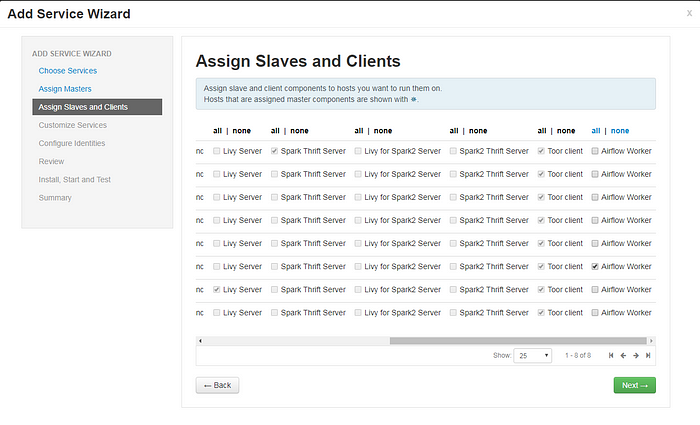

4. Choose the destination server(s) for the worker service(s).

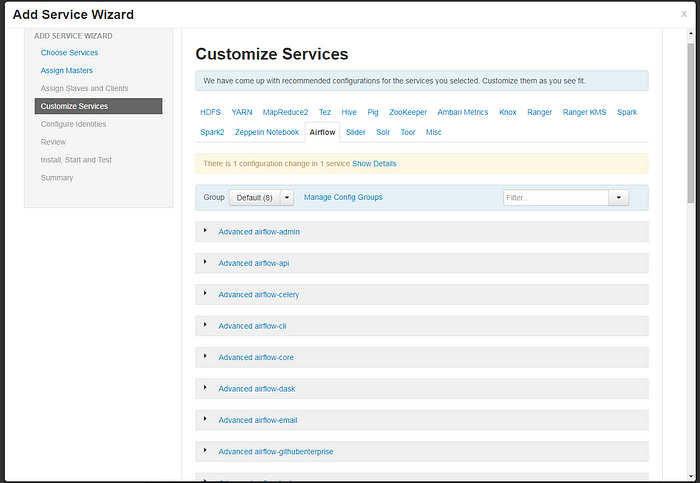

5. Optionally, change the Airflow configuration.

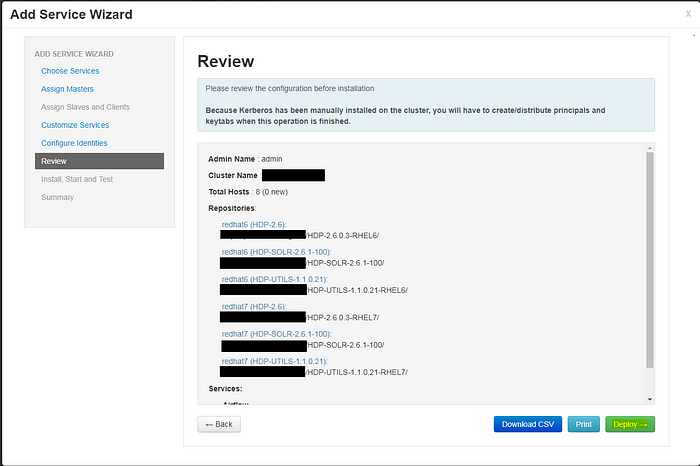

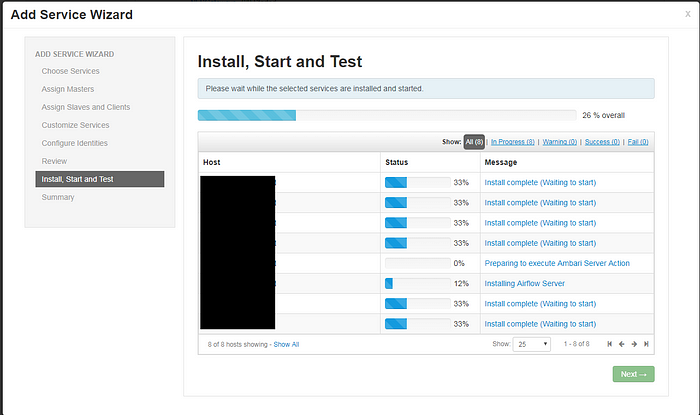

6. Deploy!

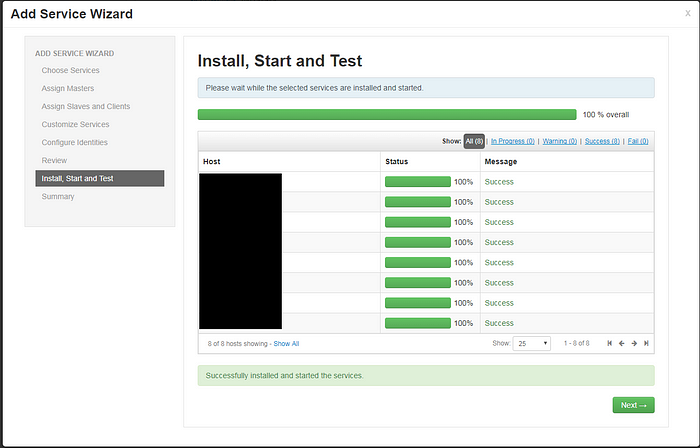

Finalizing installation

You should now see the Airflow service in Ambari.
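Besides the Ambari UI, you can confirm directly on a host that the units are running. A minimal sketch, assuming the units are named `airflow-webserver`, `airflow-scheduler` and `airflow-worker` (only `airflow-webserver` appears above; the other two names are guesses), with `SYSTEMCTL` as a hypothetical override hook:

```shell
#!/usr/bin/env bash
# Sketch: report whether each Airflow systemd unit on this host is active.
# Unit names other than airflow-webserver are assumptions.
set -eu

check_airflow_units() {
  local systemctl="${SYSTEMCTL:-systemctl}"   # hypothetical override hook
  local failed=0
  local unit
  # Fall back to the assumed unit names when none are passed in.
  [ "$#" -gt 0 ] || set -- airflow-webserver airflow-scheduler airflow-worker
  for unit in "$@"; do
    if "$systemctl" is-active --quiet "$unit"; then
      echo "$unit: active"
    else
      echo "$unit: NOT active"
      failed=1
    fi
  done
  # Non-zero exit status if any unit is inactive.
  return "$failed"
}
```

Running `check_airflow_units` with no arguments checks all three assumed units; on a host that only runs workers, pass `airflow-worker` explicitly.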

Virtual environment support

If you want to run Apache Airflow in a virtual environment, modify the startup script “AIRFLOW_HOME/airflow_control.sh”.

For example:
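A minimal sketch, assuming the script ends up calling the `airflow` CLI and that the virtual environment lives in a hypothetical `AIRFLOW_VENV` directory (neither the variable nor the `/opt/airflow/venv` default comes from the Mpack): put the environment’s `bin` directory first on `PATH` before any `airflow` command runs.

```shell
#!/usr/bin/env bash
# Hypothetical addition near the top of airflow_control.sh: make the airflow
# CLI resolve from a virtual environment instead of the system Python.
set -eu

use_airflow_venv() {
  local venv="${1:-${AIRFLOW_VENV:-/opt/airflow/venv}}"   # assumed location
  if [ ! -x "$venv/bin/airflow" ]; then
    echo "No airflow executable in $venv/bin" >&2
    return 1
  fi
  # Same effect on command lookup as sourcing "$venv/bin/activate".
  PATH="$venv/bin:$PATH"
  VIRTUAL_ENV="$venv"
  export PATH VIRTUAL_ENV
}
```

Calling `use_airflow_venv /opt/airflow/venv` before the lines that start the webserver, scheduler or worker makes every later `airflow` call resolve to the virtual environment’s copy. Prepending to `PATH` instead of sourcing `activate` keeps the script independent of the activate script’s interactive-shell behavior.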

P.S.

If you want to use Airflow workers with the Celery executor and/or make your Airflow setup highly available (HA), please also read this excellent article by WB Advanced Analytics.